How to A/B Test your Online Forms and Checkouts

Learn how to run A/B tests on your web forms to improve their conversion rate performance

Zuko's advice on how to successfully experiment with your form

Optimizing your web form or checkout often starts with identifying and fixing the “big” issues: the blatant mistakes that drive your users to abandon unnecessarily and drag down your conversion rate. Form analytics tools such as Zuko are great for swiftly pinpointing the key problems and prompting a fix.

At this stage many companies rub their hands, say “job done” and move on to the next item on their problem list. This is a missed opportunity. Even though the major flaws in a form may have been remedied, there is still huge potential to improve the conversion rate through a properly run experimentation or A/B testing programme.

This article provides a condensed overview of how you can get A/B testing set up on your forms and how to evaluate its effectiveness.

What is A/B Testing and how does it work?

A/B testing (sometimes known as split testing) is a technique which compares two variants of a website, form or other piece of marketing material to evaluate whether one performs better than the other.

It involves running the two variants simultaneously in a live environment, with each user being shown one of the variants at random. The results are determined by actual behaviour (such as conversion or progression rate), and the goal is to identify a “winning” variant which can then be shown to all site visitors. An important factor is the use of statistical analysis to determine whether the results can be considered significant or whether the difference between the “winning” and “losing” variants falls within the margin of error.
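The random split described above can be sketched in a few lines of Python. This is only an illustration, not how any particular testing platform works: the function name `assign_variant`, the experiment name and the user id format are all hypothetical. It hashes the user id so that a returning visitor always sees the same variant while traffic is still divided roughly evenly.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant with roughly equal odds.

    Hypothetical helper for illustration; real testing platforms handle
    this step for you.
    """
    # Hash the experiment name together with the user id so the same user
    # can fall into different buckets in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer and take it modulo the variant count.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Usage: count how 10,000 made-up user ids are split between the variants.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-form-test")] += 1
print(counts)
```

Hashing rather than calling a random number generator on every page view is a design choice: it needs no stored state, yet a user never flips between variants mid-test.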

Interestingly (for testing geeks like us anyway), the first recorded A/B test is purported to have taken place in the 18th century to prove that citrus juice is an effective remedy for scurvy.

There you have it - A/B testing saves lives!

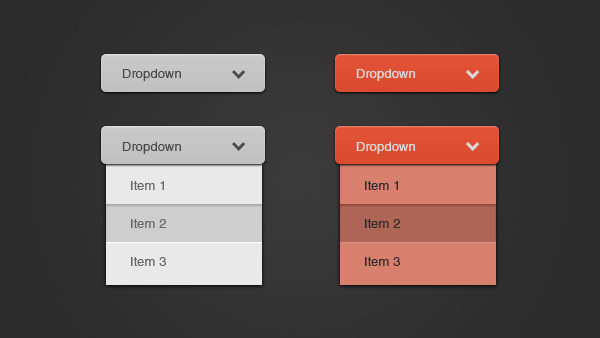

As a starter, take a look at an example test that we ran during our Formisimo days. Which of these variants do you think performed the best? Answer at the end of the article (no early peeking!)

If you like this little quiz, we recommend you also visit Guess the Test which has a whole database of similar challenges.

Why A/B Test your Forms?

The quick answer is that it allows you to start improving the conversion rate of your forms immediately.

A more involved response would add that A/B testing takes the guesswork and opinion out of your form and UX design. It enables you to truly understand which factors drive user conversion or abandonment and to apply this learning to your form flow to continually optimize it.

Stage 1 - Configure your data and testing platforms

In order to confidently draw conclusions from your testing, you need to make sure you have the right tools. Firstly, to properly A/B test a form (as opposed to more general website or software testing services), you’ll need to have a form analytics platform to understand what is going on at a field level. Of course, we’re biased in favour of Zuko but there are other tools out there.

Some companies might try to skimp at this stage by just tracking the overall form conversion rate based on the conversion and visitor data in a general analytics package but this would be a mistake. If you don’t understand what users are doing within the form, how can you draw accurate conclusions on what changes to make?

The second thing you will need is an A/B testing or experiment platform. These essentially sit behind your website allowing you to set up and run the tests, randomly diverting your web traffic to the variants being tested. Although there are a lot of software packages in the market, you should make sure that they are compatible with your form analytics provider. Whilst Zuko is able to pull in data from all the major tools, we have direct integrations with Google Optimize, Convert and Optimizely so these are amongst our favourites.

Stage 2 - Create your hypothesis

Good practice is to define a hypothesis that you want to test against the status quo to see if it makes a difference. This will be along the lines of “If we make change X, our conversion rate will increase”.

Having said this, you shouldn’t just pull these hypotheses out of thin air - that would be inefficient and you’d probably not be testing the changes with the largest potential impact. You should study your form analytics data to identify problem areas and use it to generate hypotheses around areas of high possible improvement.
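As a rough sketch of how form analytics data can point you to the highest-impact hypotheses, the snippet below ranks fields by abandonment rate. Everything in it is an assumption made for illustration: the `FieldStats` structure, the definition of an "abandon" and the numbers are invented, and they are not taken from Zuko or from any real form.

```python
from dataclasses import dataclass


@dataclass
class FieldStats:
    """Hypothetical per-field summary exported from a form analytics tool."""
    name: str
    interactions: int  # sessions that interacted with the field
    abandons: int      # sessions that left the form after this field

    @property
    def abandon_rate(self) -> float:
        # Guard against division by zero for fields nobody touched.
        return self.abandons / self.interactions if self.interactions else 0.0


def rank_by_abandonment(fields):
    """Return fields sorted from highest to lowest abandonment rate."""
    return sorted(fields, key=lambda f: f.abandon_rate, reverse=True)


# Made-up numbers, purely for illustration.
fields = [
    FieldStats("email", interactions=1000, abandons=40),
    FieldStats("password", interactions=950, abandons=60),
    FieldStats("confirm_password", interactions=900, abandons=180),
    FieldStats("phone", interactions=700, abandons=70),
]

for field in rank_by_abandonment(fields):
    print(f"{field.name}: {field.abandon_rate:.1%}")
```

With these invented figures the confirm password field tops the list, so a hypothesis about removing or changing it would be a sensible first candidate.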

While every form is different, you may want to consider these areas for testing:

Call to Action - Does altering the submit button (colour, label, etc) make users more likely to progress?

Instructions - Will changing a field's label or instructions help? This study suggests that it might.

Individual fields - Does removing a particular field improve (or reduce) conversion rates?

Error Messages - Changing an error message’s copy, location or trigger can reduce abandonment on that field. If you are still using error messages like the below you may want to trial something more helpful.

Form Structure - Will breaking the form into multiple sections be more effective than a single page?

Validation - Adding inline validation or changing the trigger timing may smooth the user experience. This classic study showed a 22% increase in successful submissions with such validation.

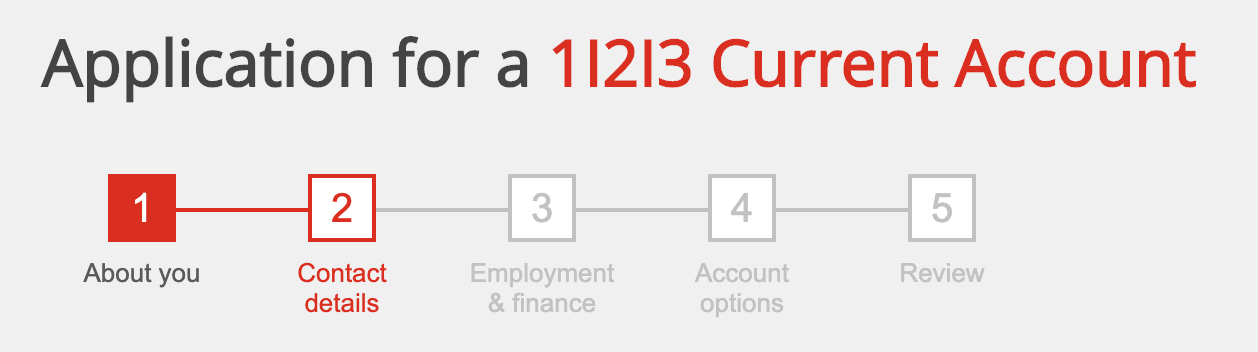

Progress Indicators - Subtle nudges showing user progress can be surprisingly effective, although this study shows they can be a double-edged sword if not implemented correctly.

Save Button - Would allowing the user to save their inputs in a long form mean they are less likely to drop out?

Payment methods - Is the way you are asking for payment putting users off? Experiment with different integrations and wording to see if you can improve.

Social Proof - Will adding a bit of urgency or evidence of happy customers make the user more likely to commit?

Form Placement - Will changing the location of the form encourage more people to start it?

Privacy Copy - Are users scared off by the small print? CXL quotes a study showing a 19% drop in conversions for a certain privacy copy formulation.

Stage 3 - Implement the experiment

Now you have your hypotheses, it’s time to select one of them and start the A/B testing process. Whilst doing so, you should make sure you follow these key points:

Only test one variable at a time - The eagle eyed among you may have spotted that the Formisimo example we gave above had multiple changes (form title, button colour, confirm password field). This was a mistake. While we had a pretty clear test “winner”, we could not be certain exactly what had made the difference. We’ve learnt from this and so should you. Only make one change at a time.

Be clear on the metric you are testing - What is your KPI? Is it the overall form conversion, or is it the abandonment rate on a particular field? Once you have agreed this, make sure you stick with it and use it as the determinant for the test winner.

Create one “challenger” variation and compare to the “control” status quo - While you can go crazy and create multiple new variations, you really need to know how changes perform versus what you have at the moment. It’s generally best to just create one new variant based on your hypothesis for comparison.

Set sample size and randomisation rules - In order for conclusions to be meaningful, you need to have a large enough sample size of user visits (if you are unfamiliar with the concept of statistical significance you should read this HBR article). You don't need a PhD in statistics to work out the right number. Your A/B testing platform should be able to set the correct level you need. Alternatively, you can read one of the many relevant articles to work it out yourself or use a rough and ready calculator.

Go, Go, Go - Set your test running. Don’t be tempted to peek at it until you have reached the necessary sample size. The most embarrassing thing for a conversion rate optimizer is to prematurely announce the result of a test only to find the result has changed once the sample size is significant.
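If you would like to sanity check the sample size your platform suggests, the sketch below uses a common textbook approximation for comparing two conversion rates. It is not the formula of any specific tool, and the function name, the default significance level of 5% and the default power of 80% are assumptions you should adjust to your own standards.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH variant for a two-sided test.

    baseline_rate: current conversion rate of the control (e.g. 0.10)
    expected_rate: conversion rate you hope the challenger achieves
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to compute a sample size")

    # Critical values of the standard normal distribution.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    p_bar = (baseline_rate + expected_rate) / 2
    spread_null = math.sqrt(2 * p_bar * (1 - p_bar))
    spread_alt = math.sqrt(
        baseline_rate * (1 - baseline_rate)
        + expected_rate * (1 - expected_rate)
    )
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Usage: detecting a lift from a 10% to a 12% conversion rate.
print(sample_size_per_variant(0.10, 0.12))
```

Note how quickly the requirement grows as the expected lift shrinks: halving the difference you want to detect roughly quadruples the visitors you need.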

Stage 4 - Gather the results and check significance

Once you’ve reached the appropriate sample size you are permitted to see how the test went! Your initial instinct will be to look at the test metric and see which variant “won”. Don’t get too carried away though. You still need to determine whether the difference between the two variants is statistically significant. A small difference may not be conclusive.

Again, your A/B testing tool should make this explicit for you or you can confirm it for yourself. Speero has a great A/B test calculator that you can use for this.
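To confirm significance for yourself, one standard approach is a two-proportion z-test. The sketch below is a minimal version under that assumption; it is not necessarily the method your testing tool or the calculator mentioned above uses, and the function name and example figures are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for the control
    conv_b / n_b: conversions and visitors for the challenger
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one visitor")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Nobody (or everybody) converted in both variants: no evidence.
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Usage with invented figures: 100/1000 versus 150/1000 conversions.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be down to chance alone.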

Stage 5 - Make relevant changes

So, the results are in. What now? Simply:

If the result showed the challenger variant performed significantly better than the control variant, make the change permanent for all form users.

If the challenger variant is significantly worse than the control, make no changes.

If the difference is not significant you have choices:

- Make no changes (as there is no evidence there will be an improvement).

- Make the change permanent (no evidence that there will be a negative impact on performance).

- Evolve the test and re-run. For example, if your hypothesis is that a change in error message will reduce abandonment but the test results are inconclusive you may want to try a further version of the error copy.
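The decision rules above can be written down as a small function, which can help keep the team honest about sticking to them. This is a sketch under stated assumptions: the names are hypothetical, the 0.05 threshold is a convention rather than a rule, and it assumes a metric where higher is better (such as conversion rate). For a metric like field abandonment you would flip the comparison.

```python
from enum import Enum


class Decision(Enum):
    ROLL_OUT_CHALLENGER = "make the challenger permanent"
    KEEP_CONTROL = "make no changes"
    INCONCLUSIVE = "not significant: keep, ship, or evolve and re-run"


def decide(control_rate, challenger_rate, p_value, alpha=0.05):
    """Map test results to one of the three outcomes described above.

    Assumes a higher rate is better; alpha is the significance threshold.
    """
    if p_value >= alpha:
        # No reliable difference: the choice is a judgment call.
        return Decision.INCONCLUSIVE
    if challenger_rate > control_rate:
        return Decision.ROLL_OUT_CHALLENGER
    return Decision.KEEP_CONTROL


# Usage with invented figures.
print(decide(control_rate=0.10, challenger_rate=0.15, p_value=0.001).value)
```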

Stage 6 - Rinse & Repeat

You’ve just successfully completed your A/B test, made the changes to your form and communicated it to the rest of the team. It’s now time to put your feet up and bask in the reflected glory of your relative genius, isn’t it?

No?

Of course not. It's time to push on further with more tests to continually improve. Companies with constant experimentation programmes will generally reap the rewards - Bing boosts revenues per search by 10-25% per year by experimentation alone. Don’t rest on your laurels. Tee up your next test and get it live.

Summary and Epilogue

Hopefully, the above has taken you through the rough steps needed to get your A/B testing up and running and convinced you of its merits.

For more top tips on how to continually improve the performance of your forms or checkouts take a look at our Big Guide to Form Optimization and Analytics.

Ta ta for now….

Oh.

You want to know which variant won our test? Go on then.

As you may well have guessed, Variant B was the clear winner, delivering 56% more conversions than Variant A. As we mentioned above, though, we made a booboo in not testing only one variable. While we strongly suspect that the removal of the Confirm Password requirement drove the improved performance, we can’t definitively prove it. Don’t make the same mistake as us!

Happy testing.
